2023 has seen an explosion of new developments in, and discourse about, all things to do with Artificial Intelligence.

After the recent hype surrounding the Metaverse and NFTs, it can be tempting to dismiss all the talk around AI as just the latest passing gimmick. But when you cut through the distracting noise and focus on the specific products of AI, it’s clear we are facing a significant new era of technological progress.

In this series of posts I’m attempting to take stock of what I know, what I don’t know, what I think, and what I can only speculate about in relation to advancements in AI and what this means for my world: its impact on data visualisation.

I’ve been delaying writing this piece for months. The pace of change is so rapid, as is the growth in the accompanying discourse and the amount of reading to try to learn from. There’s frankly too much to comprehend, but rather than delay further and wait for an elusive moment when I feel ‘on top’ of this subject (which will never arrive), it’s time to go ahead and publish.

I always feel that a good way to establish clarity about what you do and don’t know about a subject is to challenge yourself to write about it for others. Having had a lot of discussions with colleagues in the field at various points over the year, I get the clear sense that there are lots of us trying to make sense of all this and probably struggling to box it all up into clear containers of understanding.

I see these posts as being akin to sharing my notes, passing on my bookmarks, and generally lowering the rope ladder to help others ascend to the next level of awareness, even if that awareness is still somewhat scattered and rudimentary. I expect some of this will be obsolete, outdated, or missing whole swathes of relevant references within months as developments continue. But it can also serve to draw a line in time and say: this is what I was thinking about now.

This post was a one-parter, then became a four-parter, and eventually went back to two. There’s a fair amount of stuff to wade through here, but it feels simpler to bundle it into two distinct companion pieces. Across each part there are several distinct headings as I attempt to bring some coherence and organisation to this wide-ranging topic:

In PART 1 I attempt to make general sense of the topic of Artificial Intelligence, present the emerging common generative AI products, offer a further collection of somewhat diverse general reading references about the subject that I’ve found helpful, and close by highlighting some of the techno-ethical issues and risks being raised.

In PART 2 I look at the subject more specifically through the lens of data visualisation, as I consider how AI could be applied to, or be beneficial for, any of the discrete tasks that go into preparing, conceiving, and producing a data visualisation solution. This section also includes a (current) collection of some of the data visualisation tools that have launched with explicit AI integrations.

The featured image for this article portrays Otto, the inflatable autopilot seen in the 1980 classic movie Airplane! It captures my overall feeling that the role of AI in data visualisation will be (should be) to offer smart assistance on objective, repetitive, or complicated tasks, which then better enables human creators to focus their energy on tasks that are inherently subjective and/or creative.

PART 1

Understanding Artificial Intelligence

At times I’ve struggled to fully grasp many aspects that surround or branch out of discussions about AI. I say ‘fully’ grasp because there are moments when something seems to make sense, and then along comes an alternative or even contradictory viewpoint and the clarity becomes clouded.

A typical definition I’ve seen for Artificial Intelligence is “a machine’s ability to perform the cognitive functions we usually associate with human minds, such as perceiving, reasoning, learning, interacting with an environment, problem solving, and even exercising creativity”.

That seems to capture things in a reasonably understandable way, but it is the definitions beneath this overarching term that I’ve often found obscure my understanding. Commentators seem to draw many distinctions in terminology around the products or applications of Artificial Intelligence. These include, but are not limited to, Machine Learning (ML), Large Language Models (LLM), and Generative AI (“GenAI”).

Given that this article is about the impact of AI on data visualisation, I feel Generative AI is likely the most relevant label for the types of AI products and applications that will offer useful assistance to our practice.

GenAI is sometimes described as any artificial intelligence application that can generate content, such as text, images, or other media. Such GenAI tools or systems generate their outputs from natural language prompts, and they include high-profile LLM-based tools like ChatGPT and image-generating systems such as Midjourney and DALL-E 2. Some definitions of GenAI include the prospect of them ‘creating original content’, but I’m not sure that either ‘creating’ (vs. ‘generating’) or ‘original’ is a reasonable label for the content these systems produce.

For further reading on definitions, rather than just regurgitate a big list of proposed terms, let me direct you to a couple of comprehensive glossaries from the Financial Times and the Council of Europe.

The other definitional issue I have struggled with is the distinction between technology that is authentically AI and general computing technology that helps automate tasks but isn’t branded as AI.

The common contrast would appear to be, in broad terms, that non-AI computing technologies are programmes that undertake problem-solving tasks efficiently, whereas AI does this and also enables computers to learn and think with a degree of intelligence, adapting their responses or approaches to such tasks through new information and/or user prompting.

That still leaves some cloudiness. Is the spell checker in Word AI? Is an adjustment filter in Photoshop AI? Is the ‘Show Me’ chart suggestion aid in Tableau AI? More broadly, is the ‘those who bought that also bought this’ feature on shopping sites like Amazon considered AI?

Although these are all clever and useful features, I feel only the last of them would authentically be considered AI, given that its recommendations are constantly refined as the data feeding its algorithm grows.
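The sketch below is a toy Python version of a ‘those who bought that also bought this’ feature. It is meant to make that distinction a little more concrete. It is purely illustrative and assumes a simple co-occurrence count. It is not how Amazon or any other retailer actually builds its recommendations, and the class name `AlsoBought` is hypothetical. What it shows is that the same question (‘what goes with a tent?’) gets a different answer as more purchase data arrives, without anyone rewriting the rules.

```python
from collections import Counter, defaultdict
from itertools import combinations


class AlsoBought:
    """Hypothetical, toy 'also bought' recommender based on co-occurrence counts."""

    def __init__(self):
        # co_counts[a][b] = number of baskets that contained both a and b
        self.co_counts = defaultdict(Counter)

    def add_basket(self, items):
        # Every new basket updates the counts, so suggestions shift as data grows.
        for a, b in combinations(set(items), 2):
            self.co_counts[a][b] += 1
            self.co_counts[b][a] += 1

    def suggest(self, item, n=3):
        # Return up to n items most often bought alongside `item`.
        return [other for other, _ in self.co_counts[item].most_common(n)]


if __name__ == "__main__":
    rec = AlsoBought()
    rec.add_basket(["tent", "torch"])
    print(rec.suggest("tent"))  # ['torch']
    rec.add_basket(["tent", "stove"])
    rec.add_basket(["tent", "stove"])
    print(rec.suggest("tent", n=1))  # ['stove']
```

Whether a counting rule this simple deserves the label ‘AI’ is, of course, exactly the kind of cloudiness described above.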

Generative AI products

These products are growing in number, and in their application, by the week, but here is a simple collection of links to some of the most common GenAI products.

ChatGPT from OpenAI

Bard from Google

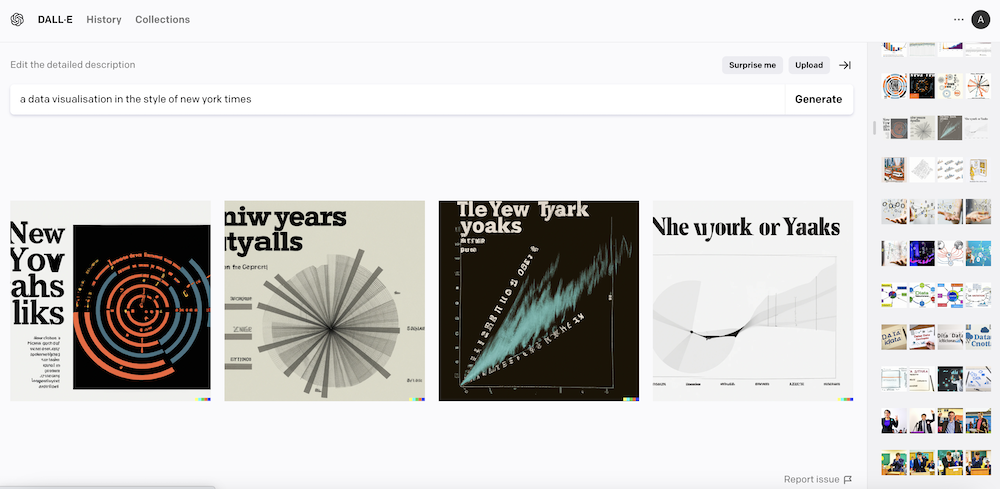

DALL-E 3 by OpenAI

Image Creator by Microsoft

Further general reading about AI

This is a collection of links to articles and talks about Artificial Intelligence that I’ve found helpful, both for broader understanding and for their relevance to thinking about AI’s impact on data visualisation.

Scientific American | ‘See How AI Generates Images from Text’

From Jen Christiansen: “I learned a lot about the history and development of AI and machine learning while doing research/story development for this piece in the Oct 2023 issue of Scientific American. It’s fascinating, thought provoking, and yes, even a little bit scary!”

Financial Times | ‘Generative AI exists because of the transformer’

One Useful Thing | ‘How to Use AI to Do Stuff: An Opinionated Guide’

This American Life | [Podcast] ‘803: Greetings, People Of Earth’

The Verge | ‘Hope, fear, and AI’

What are the concerns and risks?

In the initial bloom of any novel technology, there is typically a great deal of discussion and debate about the potential opportunities and benefits it offers. What has strongly characterised the discourse around AI has been an equal amount of coverage about the risks, the uncertainties, and indeed the threats.

The first and most widely raised concern about AI has been the not-so-trivial matter of it potentially leading to the end of humanity. “Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war” is quite a thing to read in this statement from the non-profit Center for AI Safety, signed by hundreds of notable researchers and engineers. It would be really annoying if that happened, obviously.

I understand why people talk about this, because a brief thought experiment can quickly take us on an imagined journey from a fun, dumb image-making tool today to a self-aware machine that (rightly) decides to eliminate the humans behind human-caused climate change…

I think it would be helpful if more qualified and informed people spent less time giving eye-rolling responses to the suggested threats and more time offering constructive explanations of why they think talk of existential threat is overblown. As this thread usefully explains, AI hype is probably hype, and AI ‘doomerism’ is also hype.

In this article from Scientific American, and a similar piece in The Guardian, the focus is less on the navel-gazing and more on the immediate harm AI could cause and is already causing: “Effective regulation of AI needs grounded science that investigates real harms, not glorified press releases about existential risks”.

Chief among those harms is misinformation. Large language models are only as reliable as the information their algorithms learn from. Human expertise is arguably more important than ever for creating the authoritative and up-to-date information that LLMs can be trained on.

Whilst my simple tests of some text-based generative AI tools (and those of many others) have shown them to offer solid responses to objective prompts, acting, therefore, like a smarter search engine, they have also proved wildly inaccurate in response to subjective prompts. Placing trust in these potentially inaccurate outputs is a huge risk.

Then there is the matter of data protection, intellectual property, and copyright law. The image-generating tools are not creating unique original artworks or illustrative outputs; those outputs are the algorithmic consequence of processing the millions, even billions, of human-created works that have been scraped together to feed these machines.

There are many more complicated, technical, and human consequences of AI that pose more of a concern than a benefit, not least this one: what happens to the humans whose job duties are at risk of being replaced, rather than enhanced, by AI processes? Anecdotally, judging from scattered news reports, many corporations seem to be racing towards the appeal of using these AI products to reduce expenditure by replacing human effort with AI solutions, rather than redeploying that effort towards different duties.

Again, it’s hard to gauge the real extent of the risk at this early stage. Not every creative activity that could be replaced by AI technologies necessarily will be. There are probably lessons to draw from history, such as how the development of the camera phone impacted the work of professional photographers. Did it replace their work, diminishing their prospects, or did it enhance their work, make it more appreciated, and simply add to the volume of photographs being taken? Disruption is inevitable, but the form it takes and whom it affects remain uncertain.

I think one of the most interesting dimensions of how AI will proliferate into everyday life and everyday working practices will be the extent to which it might wear thin in different contexts. It’s a tangential case, but the recent announcements of supermarkets abandoning their automated self-checkouts point to a possible future in which there is a backlash against slick automation and inauthentic experiences.

This could be where we witness generational division, however. I do wonder whether my Generation X mindset (and my predisposed leaning towards pre-tech/analogue nostalgia in certain contexts), and that of those older than me, will embrace developments differently to, let’s say, Millennials and the generations that follow that cohort, who have grown up exposed to or immersed in the digital world from a young age. Of course, not all societies have the same access to technology, so, as ever, this new era may remain out of reach for many across the developing world.

There’s a further accessibility dimension with respect to pricing. Though a lot of the new AI products are currently available without charge, that will not last. How could it? AI tools are free for now to build up the buzz and to conduct huge testing experiments, but that tap will be turned off as soon as the commercial genie is released.

Here are some further references, articles, and remarks about topics linked to the more negative side of the emerging technological and human consequences of AI.

The Guardian | ‘The Guardian’s approach to Generative AI in journalism’

The Cambridge Dictionary | ‘The Word of the Year 2023 is… hallucinate’

iA | ‘No Feature’

Jon Keegan on Threads | Future of GPT differentiation

This observation notes a tension: for ChatGPT’s output to be trustworthy and truly useful, users will need to place trust in it by allowing it to access more of our information as its input…

Common Sense Media | ‘Common Sense Media Launches First-Ever AI Ratings System’

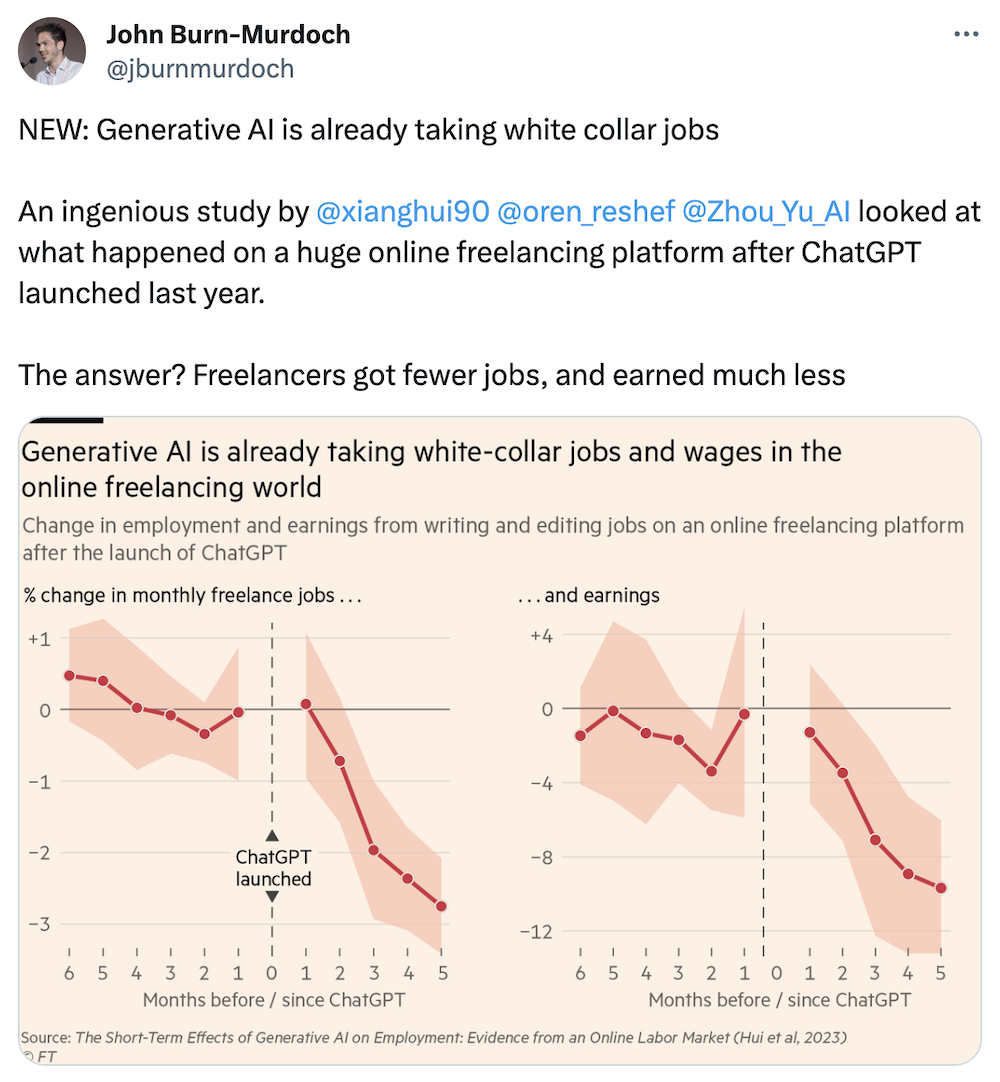

Financial Times | ‘Here’s what we know about generative AI’s impact on white-collar work’ (Twitter thread from John Burn-Murdoch)

New Yorker | ‘A Coder Considers the Waning Days of the Craft’

MSN (via Wayback Machine) | ‘Brandon Hunter useless at 42’ (a now-deleted AI-generated obituary…)

The Guardian | ‘‘There’s no winning strategy’: the pacy, visually stunning film about the dangers of AI – made by AI’

‘This brings up another interesting ethical element about AI – is it really taking someone’s job when the piece would never have been commissioned in the first place? Warburton points out that there have always been debates about new technologies entering the art world, from the invention of the camera to the emergence of graphic design tools like Adobe. But that doesn’t mean we shouldn’t worry about these latest developments: “I don’t think we’ve seen this kind of smash and grab at this scale before,” he says. “I think this is a very notably different kind of historical event.”’